1. Executive Summary

Healthcare is under strain. Staff shortages, higher costs, and too much admin work are putting pressure on organizations everywhere. In this climate, AI adoption in healthcare is moving from theory to practice. It’s not a distant idea anymore but a set of tools that can ease workloads, cut expenses, and help patients get faster access to care.

To understand how leaders see this shift, QuickBlox surveyed 101 professionals. The group included frontline physicians, medical directors, senior executives, and innovators from hospitals, clinics, startups, and academic centers. The mix captures the perspective of both those making decisions and those who live with the outcomes day to day.

Main Takeaways

- AI adoption is picking up. Over 70% of organizations say they plan to invest within the next year or already have, including around 10% that have already invested.

- Priorities are pragmatic. Workflow automation, virtual assistants, and operational analytics come out on top — areas where results can be measured quickly.

- Top motivators:

  - Efficiency (81%)

  - Cost savings (65%)

  - Better patient care (63%)

- Barriers remain. Data privacy and security concerns lead, followed by integration complexity, costs, and lack of staff training.

What This Means

Healthcare leaders are moving forward, but carefully. Most are starting small with back-office tools that carry less risk and show early ROI. Only after building confidence do they plan to expand into predictive analytics and, eventually, clinical decision support.

The message is straightforward: AI in healthcare and medicine is no longer about hype or experimentation. It’s about practical, ROI-focused decisions that reduce burden, protect data, and build trust for the future.

2. Introduction: Why AI in Healthcare Now

Healthcare is under pressure from every direction. Patient numbers keep rising. Staff numbers don’t. Costs go up, and so do expectations. Since COVID, digital systems have spread quickly, but they’ve also raised the bar. People now expect quicker access, easier online options, and more personalized care. Providers are being told to deliver all of this with fewer resources.

In this setting, AI in healthcare has started to move from idea to practice. Hospitals and clinics are using it in small but real ways: cutting down on paperwork, helping with scheduling, and running predictive models to spot risks earlier. These early uses are building confidence. But worries haven’t gone away. Privacy, regulation, and the challenge of fitting AI into legacy systems are still on everyone’s mind.

Why This Survey Matters

This survey is different because it pulls in voices from all sides:

- Doctors and medical directors who see the day-to-day pain points.

- Executives who sign off on budgets and strategy.

- Academics and innovators who are testing new tools.

It’s not just the top or the bottom of the system talking — it’s both. That makes the results more grounded.

What Comes Next

The picture that emerges is pragmatic. Efficiency and cost savings lead the way. Patient care improvements follow close behind. This white paper distills those insights into a roadmap — showing where AI is being applied now, the barriers holding it back, and what healthcare leaders should focus on first.

This white paper captures diverse perspectives on AI adoption, reflecting how different professionals are using or planning to use AI in medicine and healthcare.

📌 The AI Tipping Point

2026 will mark a turning point for AI in healthcare, as generative AI and automation tools become integral parts of operations.

- AI is moving from pilot projects to scaled deployments.

- Early wins are proving ROI within 6–12 months.

- Workforce shortages and administrative burdens are accelerating urgency.

- Patients increasingly expect digital-first care experiences.

- Executives now view AI as strategic infrastructure, not an experiment.

3. Methodology & Respondent Profile

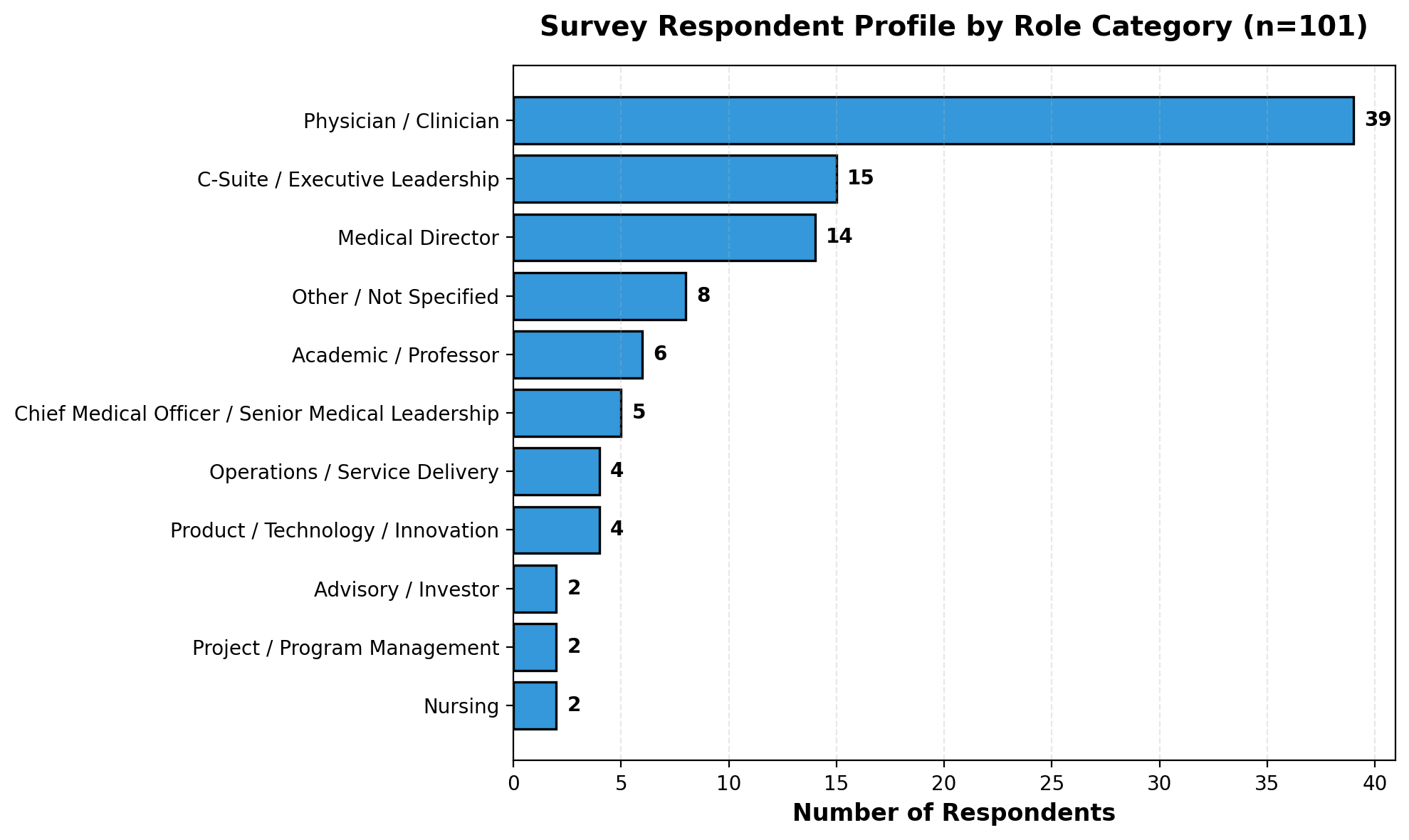

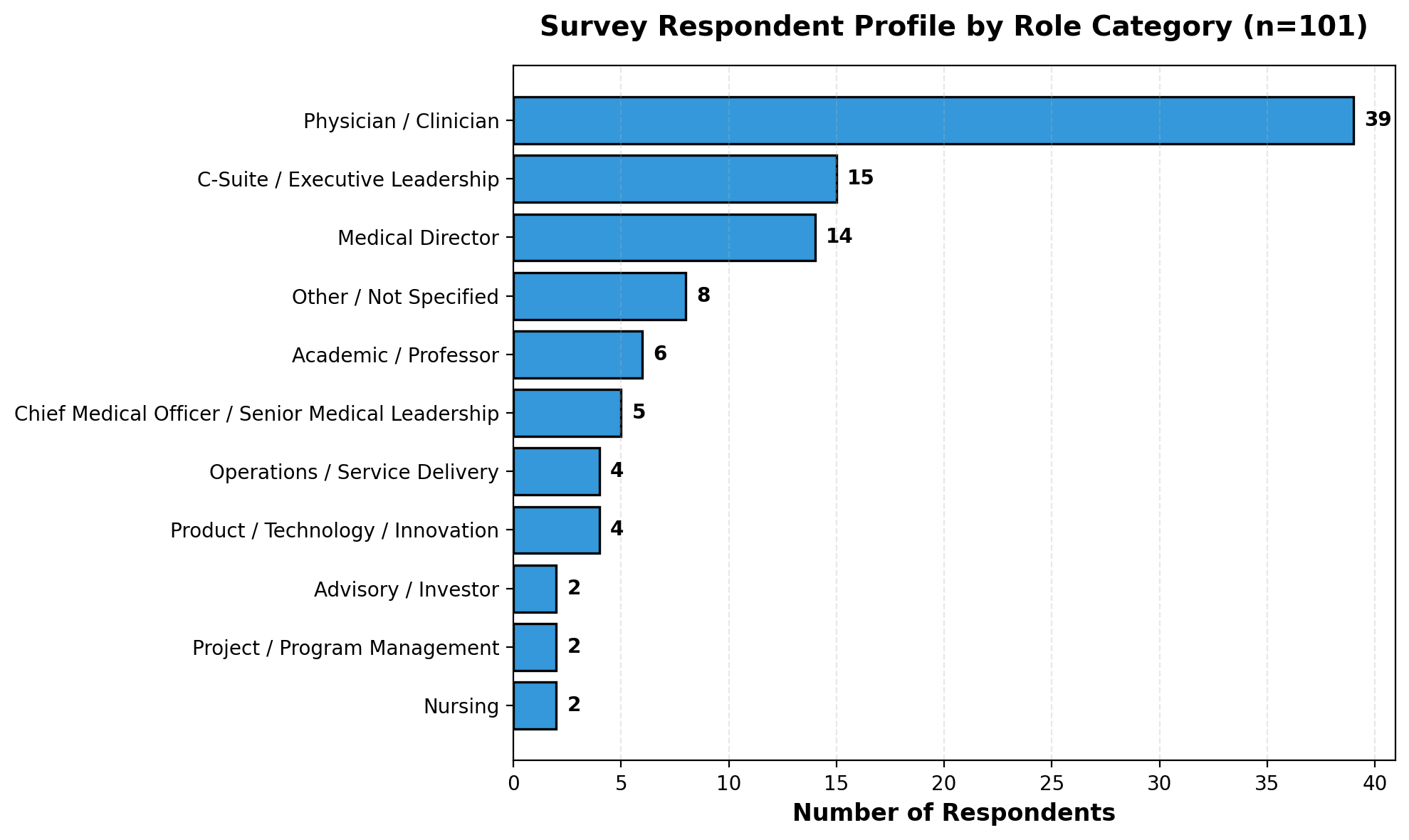

To get a real sense of how healthcare organizations are looking at AI, QuickBlox ran an online survey. We heard from 101 professionals across the industry. The survey was built to capture a mix of views — clinical, executive, and operational — so the results reflect both what’s happening on the ground and what’s being decided in the boardroom.

Who Responded

- Physicians and clinicians (~40%) — working across emergency, cardiology, psychiatry, family practice, and more.

- Executives (15%) — CEOs, founders, senior leaders with budget power.

- Medical directors (14%) — bridging day-to-day care with operational oversight.

- Chief medical officers & senior leaders (5%) — setting clinical strategy across systems.

- Academics/professors (6%) — bringing a research-driven view.

- Product, tech, and operations leads (10%) — often the early adopters, pushing new tools.

- Other roles (12%) — nursing, project managers, advisors, investors.

Together, these respondents span the medical field, from research to clinical application.

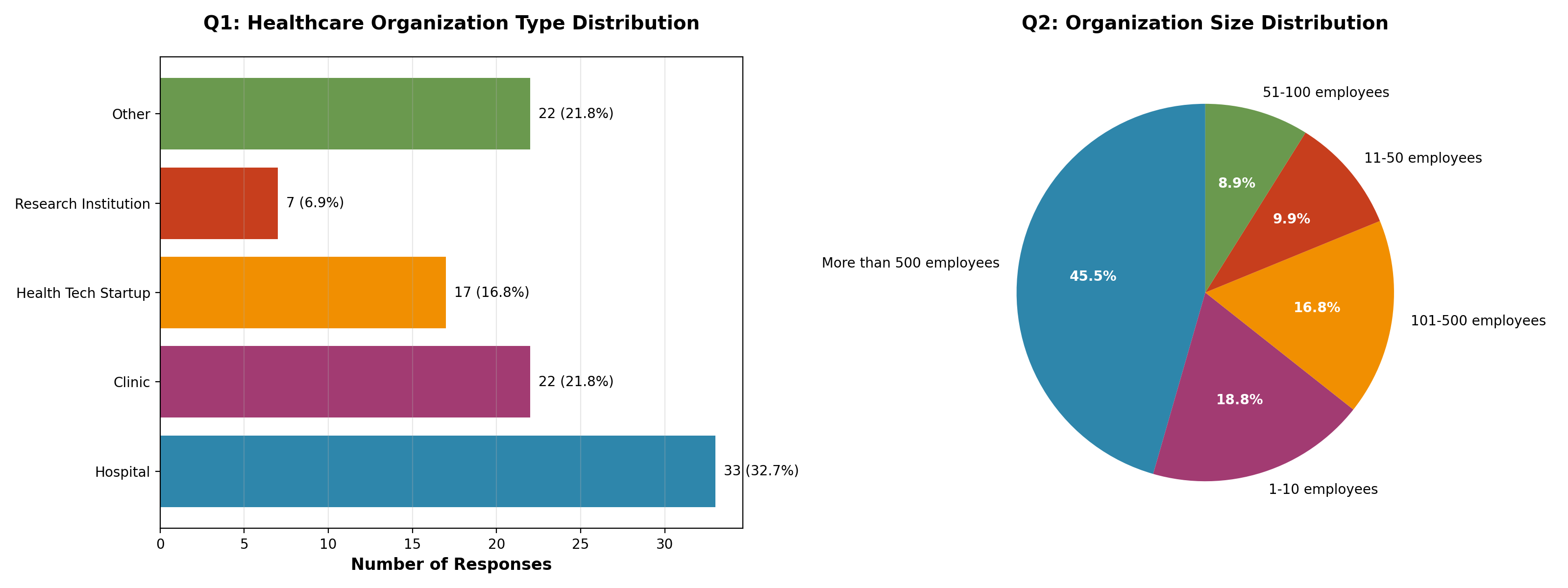

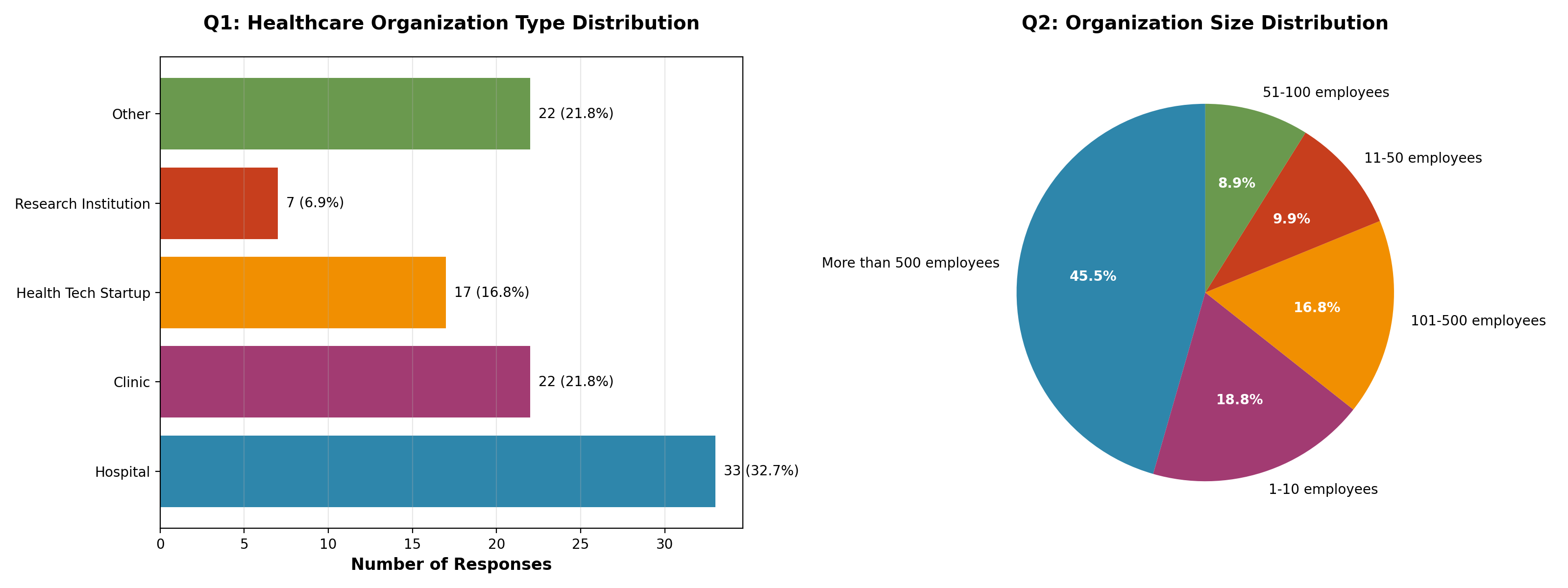

Organization Types & Sizes

- Hospitals (33%) and clinics (22%) made up more than half of the sample.

- Startups (17%) and research institutions (7%) added innovation and academic voices.

- By size: about 45% large enterprises (500+ staff), 36% mid-sized, and 19% micro groups with 10 or fewer employees.

Why This Matters

This spread gives weight to the findings. Over half the responses came from physicians and medical directors, so we hear from those living with AI in practice. At the same time, 15% of respondents were executives with budget control. Put together, it’s a snapshot that covers both daily realities and top-level decisions.

4. What’s Driving AI Adoption

When asked why they are pursuing AI, healthcare leaders were strikingly consistent: operational efficiency, cost savings, and improved patient care form the foundation of adoption decisions. Together, these three drivers create a powerful “business case triangle” for AI in healthcare.

The Efficiency-First Imperative: Why AI use in healthcare starts with operations

The most common response by far was operational efficiency, chosen by 81% of respondents. Healthcare organizations are overwhelmed by administrative work—documentation, scheduling, prior authorizations, billing—and see AI as a way to free up scarce clinical time. For many, efficiency is not simply about speed but about survival: finding ways to do more with constrained staff and budgets.

Cost and Care in Balance

Close behind, 65% cited cost reduction as a critical motivator. Rising labor, technology, and compliance expenses are forcing providers to look for sustainable savings. AI’s promise of reducing repetitive tasks and optimizing workflows directly addresses these financial pressures, showing how AI can improve both efficiency and outcomes. Importantly, 63% also highlighted improving patient care. Leaders recognize that technology must not only cut costs but also translate into better access, faster responses, and higher quality care.

Innovation Takes a Back Seat

Interestingly, only 33% cited “innovation” or staying competitive as a top driver. This shows healthcare organizations are pragmatic: they want AI to deliver measurable ROI, not just cutting-edge features. In other words, executives are less interested in experimenting with the latest algorithms than in solving the everyday problems of overburdened clinicians and frustrated patients.

Implication for Executives

Successful AI initiatives will be those that balance efficiency, cost, and patient outcomes simultaneously. Organizations are not willing to choose one dimension at the expense of another. For healthcare executives, the message is clear: lead with use cases that save time, reduce costs, and demonstrably improve care delivery.

5. Top Concerns: What Holds AI Back

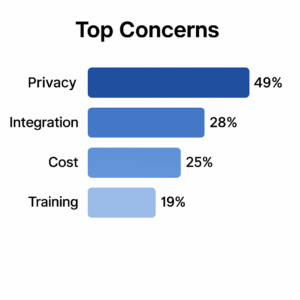

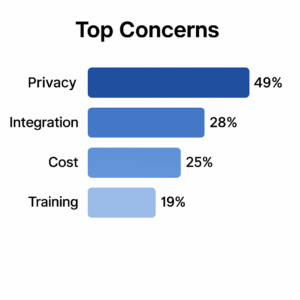

There’s plenty of excitement about AI, but leaders aren’t blind to the roadblocks. The survey surfaced a mix of technical, financial, and human challenges that could slow adoption if they’re not dealt with.

Security Comes First

The top issue is data privacy and security, flagged by almost half of respondents. With so much sensitive patient information at stake — and strict HIPAA rules to follow — the risk of breaches or compliance failures is always front of mind. A few recent data leaks in the industry have only made people more cautious. In practice, this means leaders know any AI rollout has to start with a security-first mindset.

Integration and Cost Pressures

Next on the list were integration headaches (28%) and high costs (about a quarter to a third of respondents). Many organizations still depend on patchy, legacy EHR systems that don’t play nicely with new tools. Even when a solution looks good, the real bill — customization, training, ongoing support — often ends up higher than expected. That’s why leaders are pushing for clearer pricing and phased rollouts that can prove ROI before big money is spent.

The Human Factor

Around 1 in 5 pointed to staff training as a barrier. This highlights something often overlooked: adoption is as much about people as it is about tech. Without proper training and change management, clinicians may push back or use tools incorrectly. Interestingly, vendor reliability ranked lowest (11%) — meaning the bigger worry isn’t whether vendors can deliver, but whether organizations themselves are ready to absorb the change.

What This Means

If these basics aren’t handled, adoption stalls. Security and compliance can’t be optional, integration support has to be part of the deal, and staff training should be treated as core infrastructure, not a side note.

6. Where AI Will Have the Greatest Impact

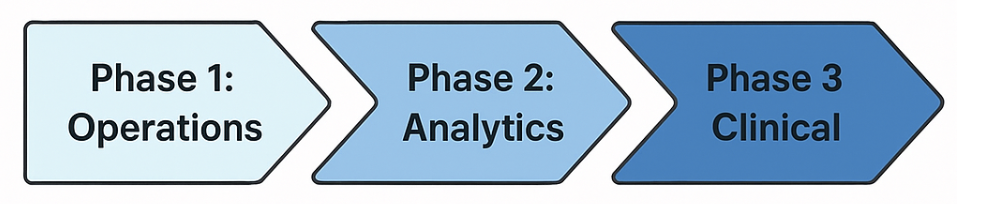

One of the strongest signals from the survey is that healthcare leaders see AI’s biggest value in operations and back-office work, not frontline clinical care. The approach is pragmatic: start with lower-risk tools that show results quickly, then build toward more complex uses later.

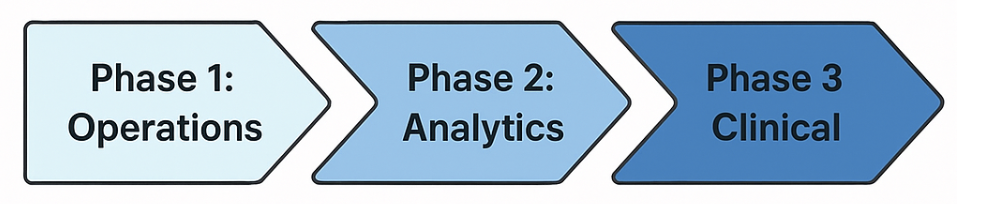

Phase 1: Operations and Administration

The top picks were operational efficiency (68%) and administrative tasks (62%). This means automating paperwork, scheduling, and billing — the kind of repetitive work that eats up staff time. The payoff isn’t glamorous, but it matters: freeing up clinicians so they can focus more on patients instead of forms.

Phase 2: Predictive Analytics

Next comes predictive analytics (49%). This sits in the middle — not purely admin, not fully clinical. Leaders see it as a bridge technology, offering insights like spotting readmission risks or flagging patients who need closer monitoring. It adds value without making direct treatment decisions, which helps organizations trust it more.

Phase 3: Clinical Applications

Direct care uses, such as AI-assisted diagnosis (37%), triage (34%), and patient engagement (31%), ranked much lower. The gap of 30+ points behind operational efficiency (68%) shows caution. Concerns around trust, regulation, and safety are slowing the shift into clinical roles. Most leaders are clearly taking a crawl-walk-run path.

What This Means

The order is clear: start with back-office efficiency, layer in predictive analytics, and only then push into clinical AI once the foundations — governance, data, trust — are stronger. That’s how early ROI can fund and pave the way for future transformation.

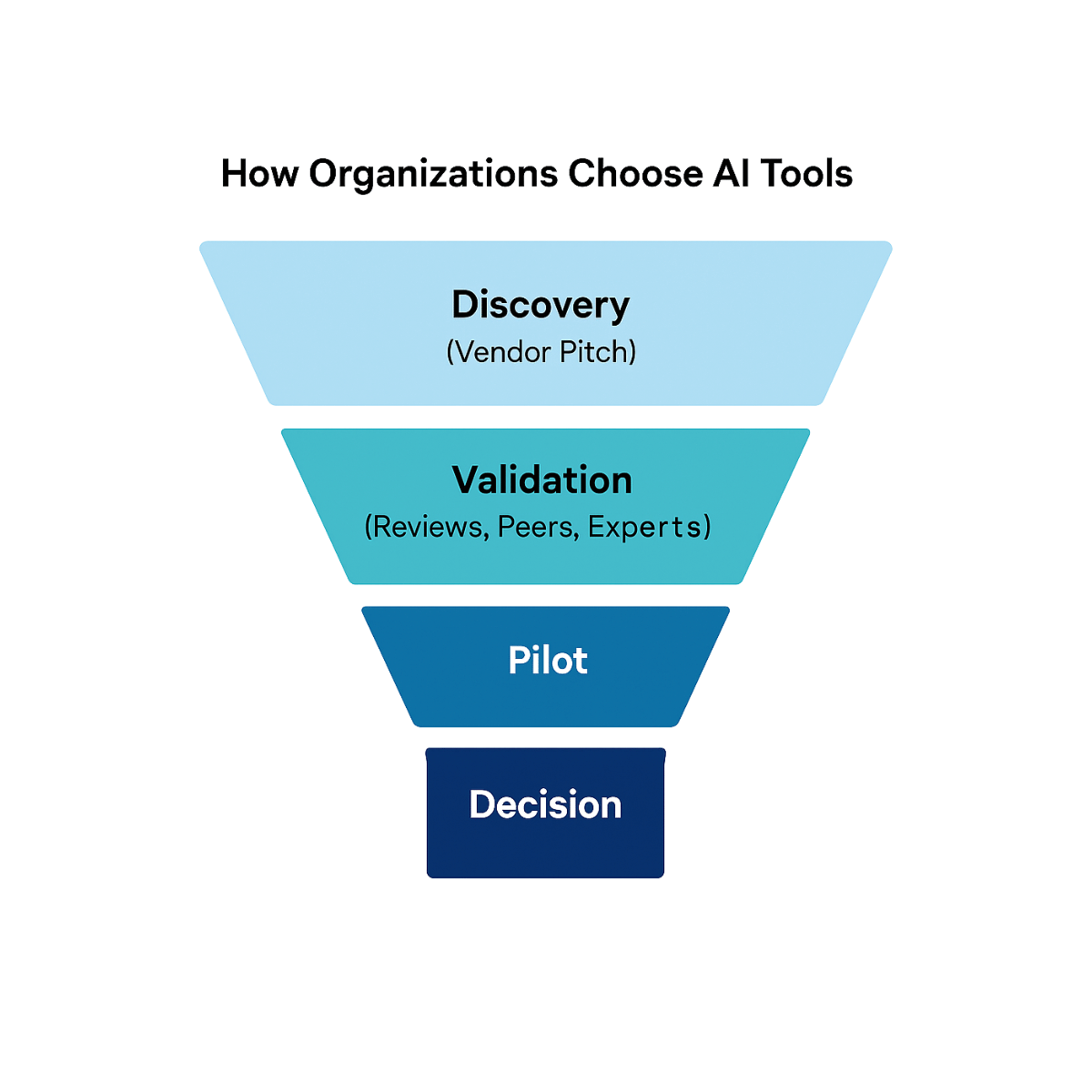

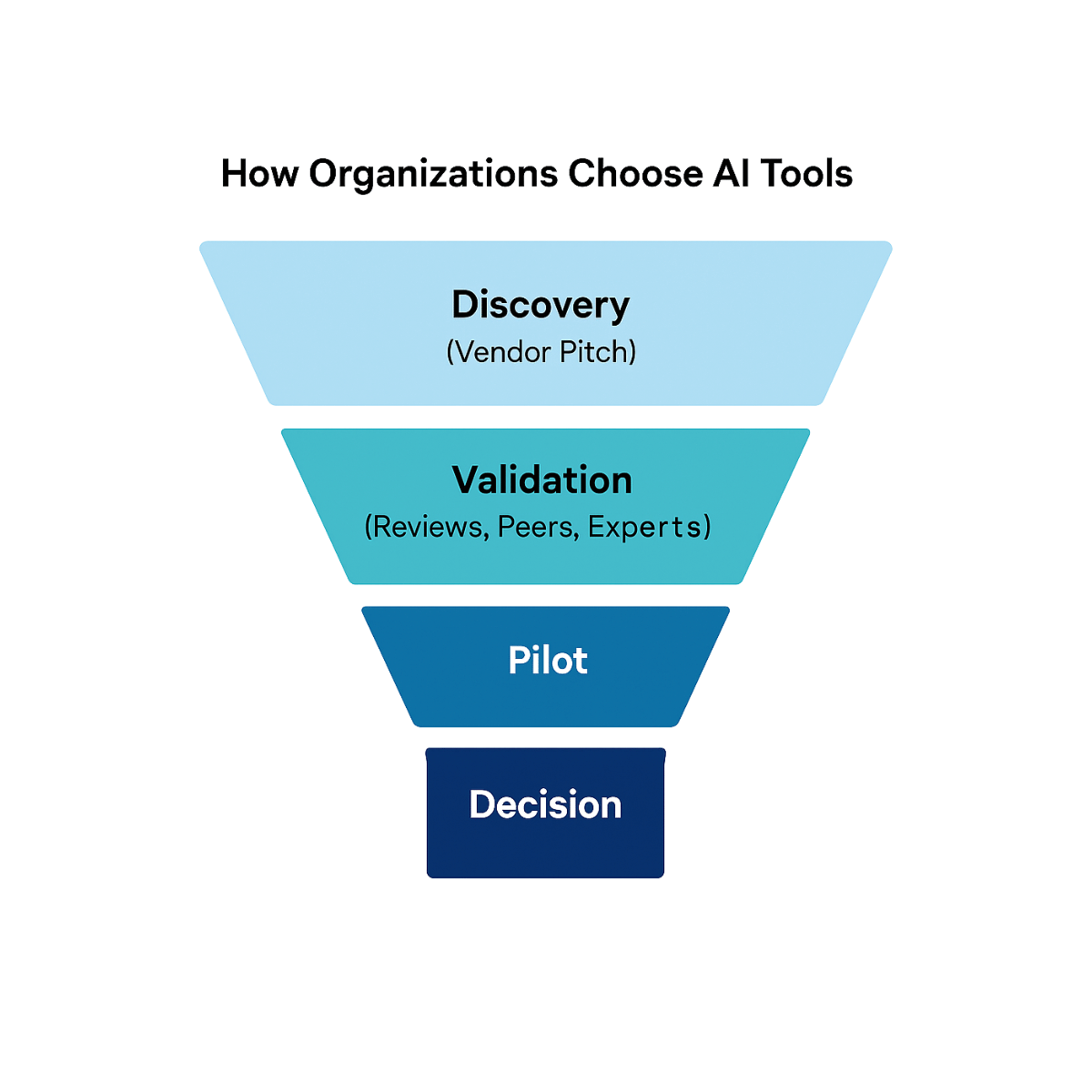

7. How Leaders Evaluate AI Solutions

Picking the right AI tools isn’t something healthcare leaders rush into. The survey shows most organizations want proof — from pilots, peers, and independent sources — before they commit to a vendor. Choosing the right health AI solutions requires both evidence and trust.

Try Before You Buy

The clear favorite method is pilots, chosen by 73% of respondents. This fits the cautious nature of healthcare. Leaders want to see how a tool works in their own setting, with their data and systems, before rolling it out more widely. Pilots give them a way to test the waters and avoid big, risky investments.

Other Ways Leaders Check Value

Pilots aren’t the only filter. Many respondents also mentioned:

- Independent research and reviews (57%)

- Peer recommendations (52%)

- Expert consultations (49%)

These numbers show decision-making is rarely based on one source. It’s layered, cautious, and often collaborative.

Sales Presentations Don’t Carry Much Weight

Only about a quarter (24%) said they lean on vendor presentations. That suggests a level of skepticism: leaders don’t want marketing slides, they want proof. Vendors relying only on demos or polished pitches are unlikely to convince serious buyers.

What This Means

For executives, the best way to separate hype from value is to lean on pilots and peer networks. For vendors, the lesson is simple: make products pilot-friendly, share hard evidence, and back it up with real case studies.

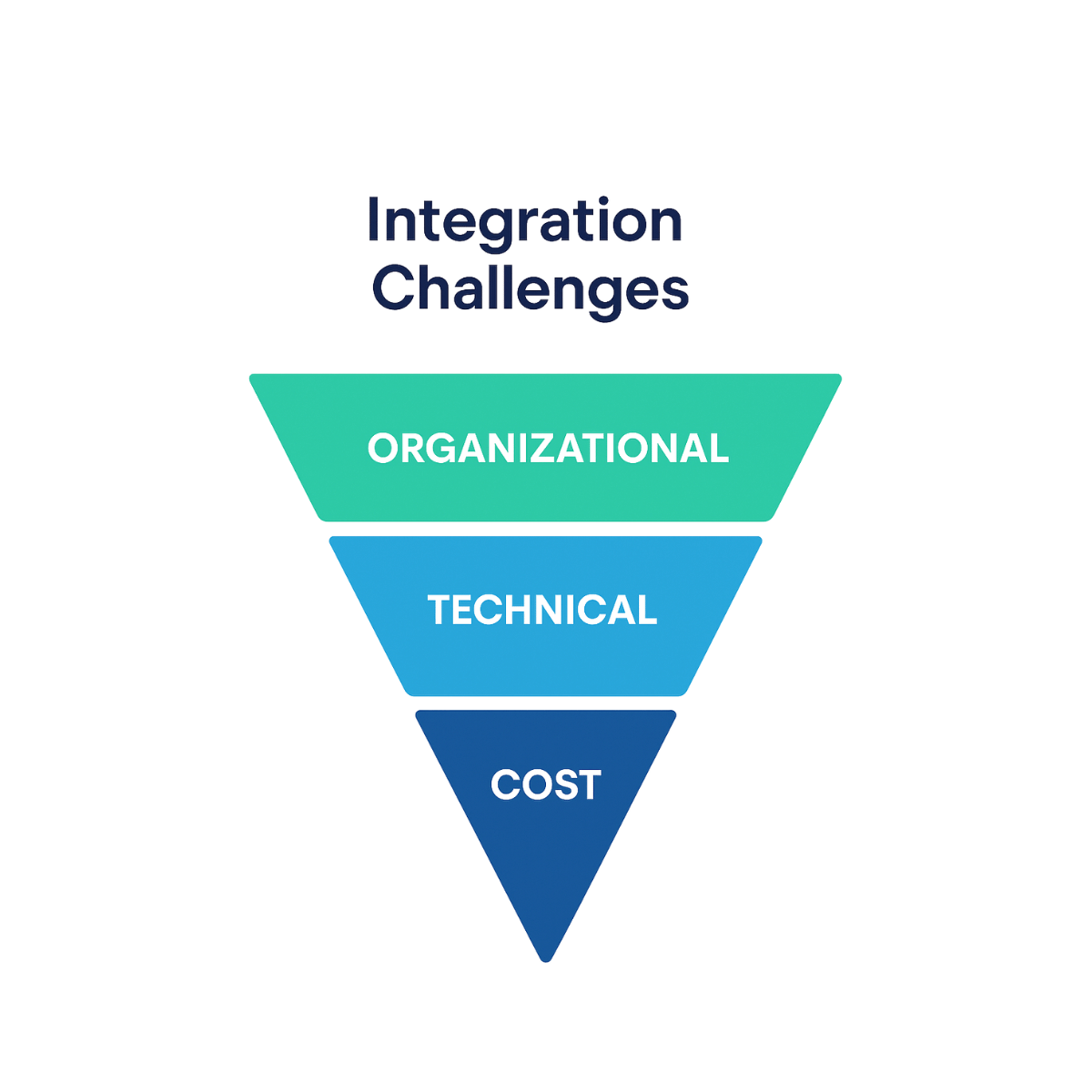

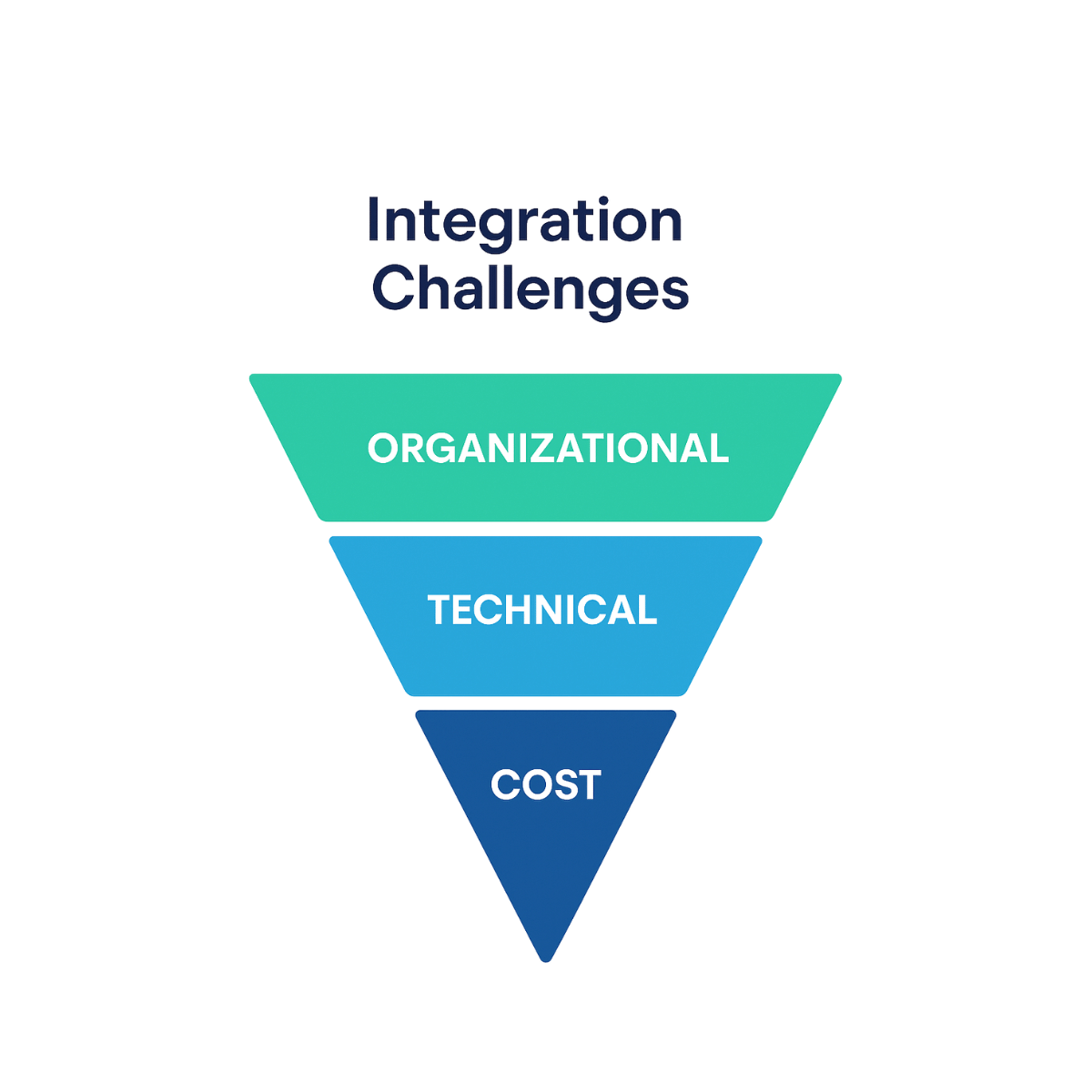

8. Integration Challenges

Even with enthusiasm for AI, moving from interest to actual use isn’t straightforward. The survey shows that organizations run into a mix of financial, technical, and organizational barriers once they try to integrate AI into their existing systems.

Cost Is Still a Major Hurdle

About 35% pointed to high costs as the main challenge. Pilots might look affordable, but once you add customization, integration support, training, and maintenance, the price tag grows. This gap between expectation and reality often slows projects down. Leaders are asking for more realistic budgets and clearer pricing from vendors.

Technical Barriers

Roughly 1 in 5 respondents highlighted technical issues. Many healthcare systems still rely on fragmented or outdated EHR platforms, and plugging in new AI tools isn’t simple. A solution might work in a small pilot, but scaling it across a big hospital network usually needs extra expertise and investment.

Organizational Roadblocks

Nearly 44% mentioned organizational barriers — limited resources, weak leadership backing, or staff pushback. These can stall adoption even when the tech itself works fine. Without executive sponsorship and clear support, projects risk being shelved.

What This Means

Integration challenges often show up in order: first the cost, then technical fit, then organizational buy-in. To succeed, leaders need to plan for all three — budgeting with a cushion, securing technical expertise, and engaging staff early. Vendors can play a role too, by offering flexible deployment options and being upfront about the real cost of ownership.

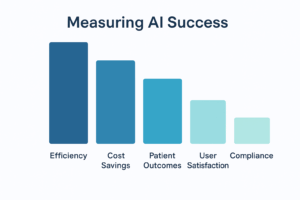

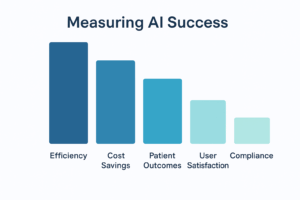

9. Measuring AI Success

How do healthcare organizations judge whether AI is actually working for them? The survey points to one clear answer: efficiency. That’s the main yardstick, followed at some distance by cost savings and patient outcomes.

Efficiency Above All

Almost 9 out of 10 respondents (86%) said they measure success by efficiency gains. That lines up with efficiency also being the top reason for adopting AI in the first place. Leaders expect tools to cut admin time, speed up tasks, and give staff more space to focus on care. These improvements often set the stage for other benefits to follow.

Costs and Care Still Matter

Beyond efficiency, leaders look at:

- Cost savings (64%) — a way to prove the numbers add up.

- Patient outcomes (61%) — showing that AI doesn’t just help the bottom line but also improves care delivery.

Taken together, these three form a kind of “triple bottom line”: smoother operations, lower costs, and better care.

What’s Missing

Some measures don’t get as much attention. User satisfaction (37%) lags, even though staff frustration often leads to resistance. Compliance (19%) is even lower, possibly because it’s treated as a given. Ignoring these areas could mean blind spots that come back to bite later.

What This Means

If success is judged only by efficiency, leaders risk missing the bigger picture. Efficiency, cost, and patient outcomes need to be read together, and a stronger mix of metrics that also covers staff satisfaction and compliance will give a fuller view of whether AI is delivering real, lasting value.

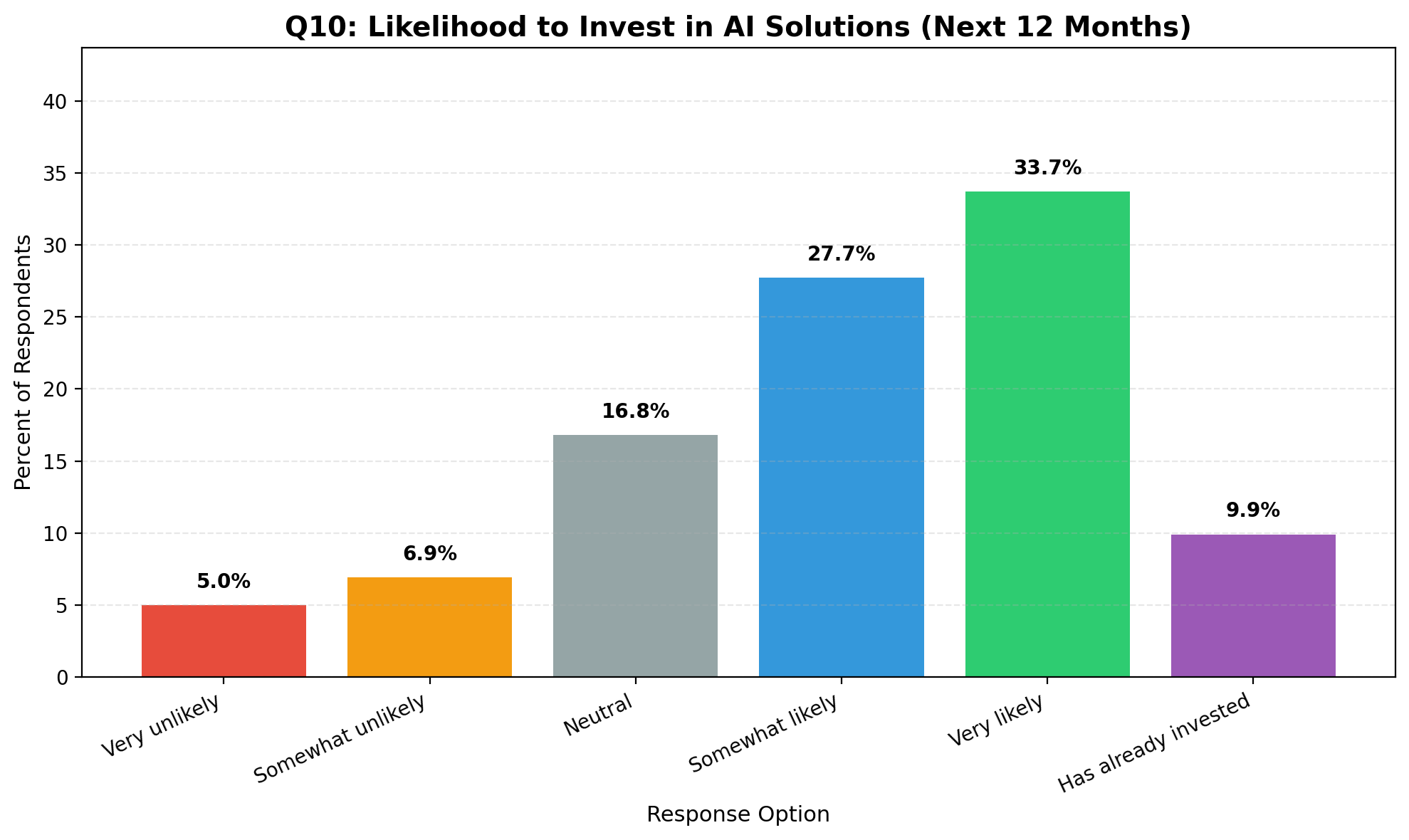

10. Investment Outlook

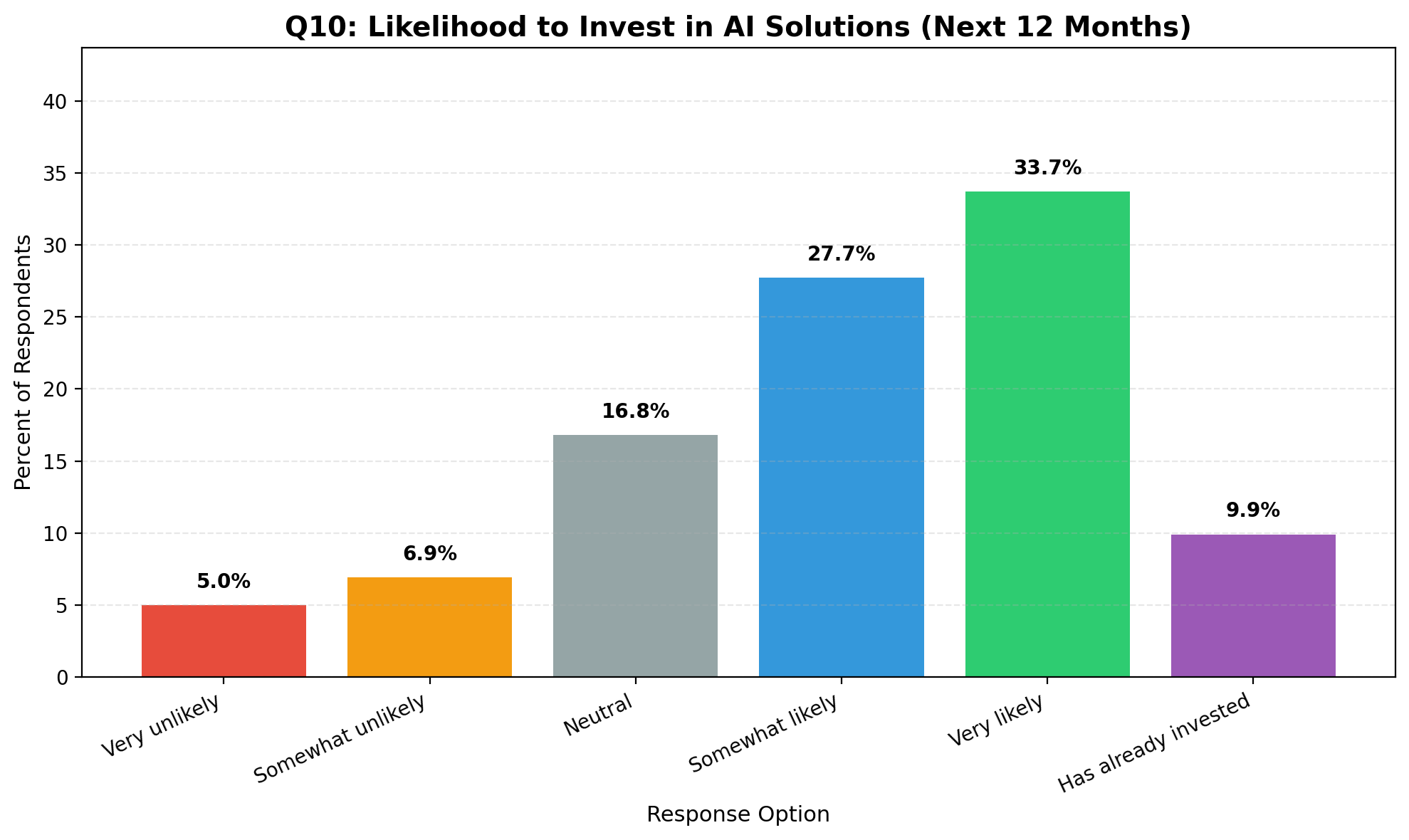

The survey points to strong momentum for AI in healthcare. More than 7 in 10 organizations say they’re either planning to invest in the next year or already have. That’s a clear sign the field is moving from experimentation into real execution.

Appetite for Investment

- 61% said they are “likely” or “very likely” to invest soon.

- About 10% already have, putting them ahead of the curve.

- Only 12% said they are unlikely to invest, showing very little resistance at the strategic level.

Step by Step, Not All at Once

Most respondents land in the “likely” group rather than “very likely.” That suggests a cautious optimism. Leaders are interested in AI but want to move carefully — piloting, testing, and proving ROI before committing to large-scale rollouts.

What This Means

The climate looks like a tipping point. AI is no longer treated as a side project. It’s becoming part of mainstream strategy. For executives, the task now is to budget smartly — fund the near-term wins like workflow automation and virtual assistants, while keeping an eye on more advanced tools, such as predictive analytics and patient-facing applications, over the next year or two.

The next 12 months could define how healthcare AI scales from pilot projects to enterprise-wide deployments.

11. What to Fund Now vs Next

The survey shows a clear order of priorities when it comes to AI investment. Leaders want quick wins first — projects that deliver ROI and ease workloads — while laying the foundation for bigger, riskier moves down the line.

Fund Now: Quick Wins

The top pick is workflow automation (74%). That means tools for documentation, scheduling, billing, prior authorizations — all the admin tasks that weigh down staff. Next come virtual assistants (47%) and operational analytics (45%). These carry lower regulatory risk, deliver results within 6–12 months, and help organizations build momentum early.

Incubate Next: Engagement and Analytics

Looking a step ahead, leaders are preparing to invest in patient engagement platforms (48%) and predictive analytics (46%). These promise better outreach and more data-driven insights. But they demand stronger governance, better data pipelines, and collaboration across teams, so the payoff is usually longer-term (12–24 months).

Stage-Gate: Clinical AI

Diagnostic support (47%) shows potential but is treated with caution. Regulatory hurdles, validation requirements, and safety concerns slow things down. Most leaders expect to test these tools carefully through pilots before thinking about wider adoption.

What This Means

The playbook is clear: fund automation now, incubate engagement and analytics next, and approach clinical AI step by step. Early ROI from admin-focused tools creates both the trust and the budget needed to move toward bigger transformations later.

12. Resource Priorities for Successful AI Adoption

The survey shows that success with AI doesn’t really hinge on algorithms. It depends on people, data, and the systems wrapped around them. Leaders pointed to three main tiers of priorities that need attention if AI is going to deliver real outcomes.

Top Priorities: People and Data

The biggest gap is talent and skills. And it’s not just data scientists. Organizations need clinical informaticians, product managers, and those “in-between” roles that can translate technical systems into something that works in day-to-day care. Just as important is data infrastructure and governance. AI is only as strong as the data behind it. That means clean, connected, compliant data pipelines with clear ownership and standards are essential.

Middle Tier: Integration and Security

The next set of needs is about fit and trust. Leaders talked about change management and workflow integration: making sure AI tools actually help staff instead of adding one more layer of work. And of course, security and privacy are never far from mind. Building privacy-by-design and compliance guardrails into every deployment is a must.

Third Tier: Validation and Vendor Support

Lower down the list but still critical are clinical sponsorship and validation. When respected clinicians back a tool, adoption becomes much easier. Vendor support and funding matter too, but several respondents noted money alone won’t fix weak foundations.

What This Means

AI in healthcare isn’t mainly a technology challenge. It’s a people-and-data challenge first. Skilled teams, strong governance, and trusted clinician champions are the real foundations for scaling AI. These findings reinforce that AI in medical research and operations will only succeed if organizations invest in people and data as much as in algorithms.

13. Strategic Recommendations for Healthcare Executives

The survey shows two things at once: momentum and hesitation. Most leaders want AI, but they know the barriers are real. For executives, the way forward is about mixing small, practical steps with longer-term bets.

1. Start Small, Prove It Works

Don’t try to do everything at once. Begin with tools like automation for scheduling, billing, or documentation. They’re low risk and usually pay off within months. Quick wins help free up budget and win over skeptics.

2. Fix Data and Governance Early

AI falls apart if the data is messy. That means putting effort into clean pipelines, good standards, and privacy safeguards now, not later. Good data governance is the foundation of any AI in healthcare initiative. Leaders who ignore this will struggle when they try to scale.

3. Manage the Human Side

AI adoption isn’t just tech — it’s people. Without training and change management, projects stall. Involve staff early, track how they’re actually using the tools, and adjust.

4. Get Clinicians on Board

If clinicians don’t trust the system, it won’t fly. Find respected champions to validate outcomes and vouch for safety. Build in feedback loops and show transparency.

5. Use Vendors, But Don’t Depend on Them

Vendors help you get moving fast. But leaders should avoid lock-in. Pick solutions with open interfaces and grow internal teams who can support and adapt the tools over time.

6. Budget Beyond the Launch

Buying the system is the easy part. The harder (and often forgotten) part is maintenance, retraining, and updates. If you don’t budget for that, the system slowly breaks down.

The Bottom Line

Early wins in operations build confidence. Strong data and skilled people provide the base. From there, leaders can move carefully into clinical AI — without overpromising or overextending.

14. Conclusion

This survey shows that AI in healthcare has reached a turning point, reflecting broader momentum across the medical field, from admin support to predictive analytics. AI is no longer confined to pilots or small tests. It is starting to show up in everyday operations in hospitals, clinics, and even startups. The push is driven by clear goals: making work more efficient, saving money, and improving patient care. That’s why tools like workflow automation and virtual assistants are getting attention first.

Still, the road isn’t smooth. Leaders worry about privacy, system fit, and whether staff are ready. What matters most isn’t the latest algorithm but the basics — good people, good data, and good processes.

For executives, the playbook looks like this:

- Go for small, low-risk wins first.

- Clean up your data and build the right teams early.

- Involve clinicians so they trust the tools.

- Keep the long game in mind — pilots are only step one.

About This Survey

This survey was run by QuickBlox, a company building secure chat, video, and AI tools for healthcare. We focus on HIPAA-compliant communication that matches many of the needs leaders shared here — from automation that cuts admin time to AI assistants that support staff and patients.

Our platform helps organizations go beyond pilots by offering ready-made, customizable solutions that fit into real clinical workflows. In simple terms, we give healthcare teams the tools to act on the priorities this survey has uncovered. QuickBlox continues to explore how AI and healthcare intersect to improve communication, security, and patient engagement.